Context

Exposure to natural hazard scenarios is usually assessed in terms of the potential value of damage and the number of people affected. State-of-the-art scientific exposure models are based on census data aggregated by region, such as districts. At the German Research Centre for Geosciences, Felix Delattre guided a group of scientists and software engineers in building a system that increases the resolution of these models to a global grid of rectangles of around 0.2 square kilometres.

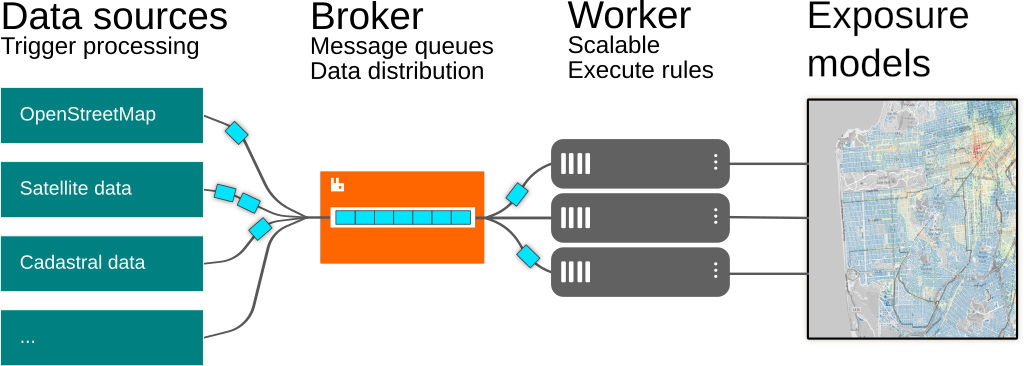

We built a tile-based big-data Geographic Information System for processing global data sources such as OpenStreetMap’s buildings and satellite earth observation data. We then applied it to create more detailed exposure models for better city and infrastructure planning and humanitarian response to natural hazards such as earthquakes.

Technical components

During a two-year, project-based employment that coincided with the pandemic, Felix guided the scientific team in integrating their research work, through software engineering and team collaboration best practices and processes, into a computing system consisting of the following main components:

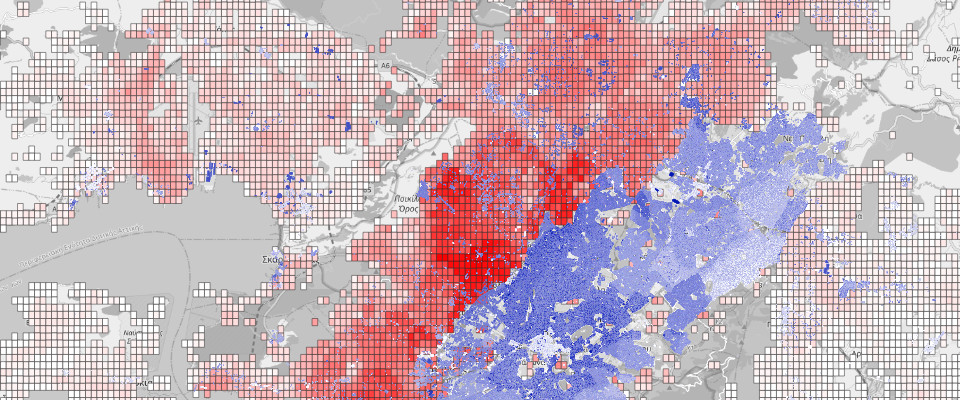

Gap Analysis: This program evaluates the completeness of buildings in OpenStreetMap. It compares the existing buildings with global high-resolution open-data settlement datasets, as well as with detailed remote sensing data of local scope, and categorizes the completeness status per tile at zoom level 18.
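To make the tile addressing concrete, here is a minimal sketch of how a coordinate can be mapped to a zoom level 18 tile index. It uses the standard Web Mercator ("slippy map") tiling formula and is not taken from the project's code; the function name `lonlat_to_tile` is hypothetical.

```python
import math

# Latitude limit of the Web Mercator projection used by common tile schemes.
MAX_LATITUDE = 85.05112878


def lonlat_to_tile(lon: float, lat: float, zoom: int = 18) -> tuple[int, int]:
    """Return the (x, y) index of the tile that contains a WGS84 coordinate.

    Hypothetical helper: implements the standard slippy-map formula,
    not the project's own gap analysis code.
    """
    n = 2 ** zoom  # number of tiles per axis at this zoom level
    lat = max(-MAX_LATITUDE, min(MAX_LATITUDE, lat))  # stay inside the projection
    x = int((lon + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(math.radians(lat))) / math.pi) / 2.0 * n)
    # Clamp so that lon = 180 or lat = -85.05... still maps to a valid tile.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


if __name__ == "__main__":
    print(lonlat_to_tile(0.0, 0.0))  # (131072, 131072)
```

A completeness status could then be stored per `(x, y)` pair, so that results from different input datasets line up on the same grid.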

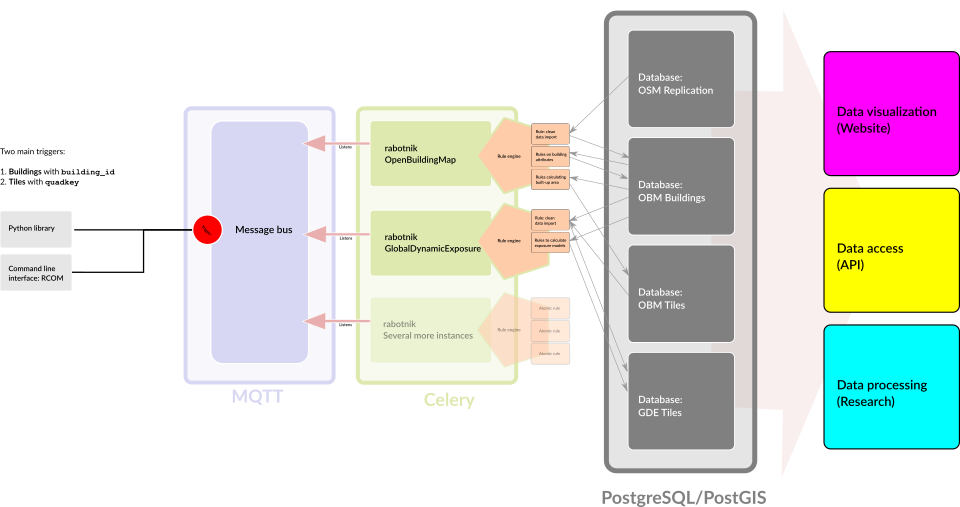

OpenStreetMap buildings import: The planet dataset from the crowd-sourced mapping project is imported into a database with imposm and written into suitable tables for buildings, points of interest, roads and land uses. An extension named spearhead has been created to consume continuous updates from OpenStreetMap, detect building updates within them and trigger the main data processing in the overall system.

Data processing framework: An asynchronous and flexible data and task management ecosystem named rabotnik has been created. It consists of a library relying on Celery for scalable processing, both parallel and asynchronous, on a single server’s resources or across several nodes in a computing cluster. Several instances of rabotnik can be created for the main processing steps. These communicate over a central message bus that uses the Message Queuing Telemetry Transport (MQTT) protocol and is implemented with RabbitMQ. Computations can be abstracted into atomic rules, which can be dynamically added to the analysis flows of particular instances.
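The idea of atomic rules that are added dynamically to an analysis flow can be sketched in plain Python. This is an illustration only: it uses `asyncio` from the standard library rather than Celery or a message bus, and the names `Flow`, `add_rule`, `count_buildings` and `total_footprint` are hypothetical, not rabotnik's actual API.

```python
import asyncio
from typing import Any, Awaitable, Callable, Dict, List

# A rule is a small asynchronous computation that receives the payload
# collected so far and returns the new values it contributes.
Rule = Callable[[Dict[str, Any]], Awaitable[Dict[str, Any]]]


class Flow:
    """Hypothetical analysis flow: an ordered list of rules."""

    def __init__(self) -> None:
        self._rules: List[Rule] = []

    def add_rule(self, rule: Rule) -> None:
        # Rules can be appended at any time, also after the flow has run.
        self._rules.append(rule)

    async def run(self, payload: Dict[str, Any]) -> Dict[str, Any]:
        # Apply the rules in order; each one sees the results of the previous.
        for rule in self._rules:
            payload = {**payload, **await rule(payload)}
        return payload


async def count_buildings(payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"building_count": len(payload["buildings"])}


async def total_footprint(payload: Dict[str, Any]) -> Dict[str, Any]:
    return {"footprint_m2": sum(b["area_m2"] for b in payload["buildings"])}


if __name__ == "__main__":
    flow = Flow()
    flow.add_rule(count_buildings)
    flow.add_rule(total_footprint)
    tile = {"buildings": [{"area_m2": 100.0}, {"area_m2": 50.0}]}
    print(asyncio.run(flow.run(tile)))
```

In a distributed setup, each rule would more likely be registered as a task with the task queue, and the trigger to run a flow would arrive as a message rather than a direct function call.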

Vector tile visualizations: The results of the building- and tile-based data processing are visualized on the web and made available to GIS software through vector tiles. These are generated with PostGIS’s ST_AsMVT and served using pg_tileserv. The websites are built with JavaScript and the Nuxt.js framework.
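As a rough illustration of tile generation with ST_AsMVT, the following sketch holds a query that encodes one tile. It assumes PostGIS 3.0 or later (for `ST_TileEnvelope`), a hypothetical table `buildings` with a geometry column `geom` stored in EPSG:3857 and an `osm_id` column, and psycopg-style named placeholders. None of these names come from the project, and no database connection is opened here.

```python
# Query that returns one Mapbox Vector Tile as a bytea value.
# Table and column names are assumptions made for this example.
MVT_QUERY = """
WITH bounds AS (
    -- Web Mercator envelope of the requested tile
    SELECT ST_TileEnvelope(%(z)s, %(x)s, %(y)s) AS geom
),
mvtgeom AS (
    -- Clip geometries to the tile and convert them to tile coordinates
    SELECT ST_AsMVTGeom(b.geom, bounds.geom) AS geom,
           b.osm_id
    FROM buildings AS b, bounds
    WHERE b.geom && bounds.geom
)
SELECT ST_AsMVT(mvtgeom.*, 'buildings') FROM mvtgeom;
"""


def tile_query_params(z: int, x: int, y: int) -> dict:
    """Validate a tile address and return the parameters for MVT_QUERY."""
    n = 2 ** z  # number of tiles per axis at zoom level z
    if z < 0 or not (0 <= x < n and 0 <= y < n):
        raise ValueError(f"tile {z}/{x}/{y} is outside the tile grid")
    return {"z": z, "x": x, "y": y}
```

With a database driver, the query would be run as `cursor.execute(MVT_QUERY, tile_query_params(z, x, y))`, and the single returned value is the binary tile that a web map or GIS client can consume.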

The whole software stack is based on Free and Open Source Software, and all software developed within the project is likewise published under the AGPLv3+ license.

All work (including this text and the illustrations) has been realized in collaboration with Dr. Cecilia Nievas, Dr. Danijel Schorlemmer, Dr. Marius Kriegerowski and Nicolás García Ospina at the German Research Centre for Geosciences.